Reliable tracking: Validating Snowplow events using Cypress & Snowplow Micro

At Auto Trader, we have migrated from Google Analytics to Snowplow for event tracking. Our previous implementation didn’t focus on the quality or trustworthiness of the events being tracked, so this time around we treated them as a first-class concern. In this post, I’ll take you through how we implemented automated data quality checks using Snowplow Micro & Cypress.

What is Snowplow?

Snowplow is our new tracking platform. It promises to revolutionise our data collection and give us the flexibility and freedom to mould the platform to our particular needs.

It works similarly to Google Analytics (GA): the tracker fires an event when something happens on the site, for example, a user clicking a link that takes them to another page. In that case, we would typically expect two events: first an interaction with the user interface, then a page load. We call these UI Interactions and Page Views respectively.

Contexts & schemas

The main difference between GA and Snowplow is that Snowplow events must conform to a schema. A schema is essentially a JSON file containing a set of rules about what data an event can and must have.

In this example, we will use the page view event, a built-in Snowplow event that fires automatically when a page loads. By default, this event only captures a few pieces of data:

- Page URL - the current page URL

- Referrer URL - the URL of the page you came from

- Page name - the name of the current page

Depending on the scenario, you may want to supplement the event with additional data to give it more meaning. Snowplow lets you attach data to events in the form of contexts, and an event can carry a single context or several. To understand what these contexts contain, we use schemas.

An example of a schema we created is page_context. It provides information about the page, such as the channel (cars, bikes, vans, etc.) and the page name. We use the page name to identify the page a user is visiting; for example, viewing an advert produces the page name cars:search:ad-view.
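Snowplow attaches contexts as self-describing JSON: an Iglu schema URI next to the data it describes. As a rough sketch of what a page_context for an advert view might look like, the block below uses a hypothetical schema URI (iglu:com.example/page_context/jsonschema/1-0-0), hypothetical field names channel and pageName, and a hypothetical buildPageName helper. The three-part channel:section:page pattern is only inferred from the single example above; it is not Auto Trader's documented rule.

```typescript
// A self-describing JSON pairs an Iglu schema URI with the data it describes.
interface SelfDescribingJson<T> {
  schema: string;
  data: T;
}

// Hypothetical page_context fields, based on the description in the text.
// The channel union is an illustrative subset.
type Channel = "cars" | "bikes" | "vans";

interface PageContextData {
  channel: Channel;
  pageName: string;
}

// Hypothetical helper: builds channel:section:page, e.g. cars:search:ad-view.
// The three-part pattern is inferred from one example, not a documented rule.
function buildPageName(channel: Channel, section: string, page: string): string {
  return [channel, section, page].join(":");
}

function pageContext(channel: Channel, section: string, page: string): SelfDescribingJson<PageContextData> {
  return {
    // Hypothetical vendor and version; the real schema URI is not given in the post.
    schema: "iglu:com.example/page_context/jsonschema/1-0-0",
    data: { channel, pageName: buildPageName(channel, section, page) },
  };
}

const advertView = pageContext("cars", "search", "ad-view");
console.log(JSON.stringify(advertView));
```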

Remember how I said schemas are a set of rules? If an event breaks these rules, it fails validation and is thrown into the bad queue. Trust me, you don’t want to go there: it’s dark and there are no cookies 👿.
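To make the good/bad split concrete, here is a toy stand-in for schema validation. It is not how Snowplow validates events (that happens against JSON Schemas resolved from Iglu); it simply hand-codes two assumed rules for page_context, a channel enum and a required page name, and routes the event to the good or bad queue depending on whether any rule is broken.

```typescript
// Toy stand-in for schema validation. Real Snowplow validation checks events
// against JSON Schemas resolved from Iglu; this only hand-codes two assumed
// rules for page_context to show how a broken rule sends an event to the bad queue.
interface PageContextInput {
  channel?: unknown;
  pageName?: unknown;
}

type Queue = "good" | "bad";

// Assumed enum of channels (illustrative subset).
const ALLOWED_CHANNELS: string[] = ["cars", "bikes", "vans"];

// Returns every rule the data breaks; an empty list means it is valid.
function violations(data: PageContextInput): string[] {
  const errors: string[] = [];
  if (typeof data.channel !== "string" || ALLOWED_CHANNELS.indexOf(data.channel) === -1) {
    errors.push("channel must be one of: " + ALLOWED_CHANNELS.join(", "));
  }
  if (typeof data.pageName !== "string" || data.pageName.length === 0) {
    errors.push("pageName is required and must be a non-empty string");
  }
  return errors;
}

// A single violation is enough to send the whole event to the bad queue.
function queueFor(data: PageContextInput): Queue {
  return violations(data).length === 0 ? "good" : "bad";
}

console.log(queueFor({ channel: "cars", pageName: "cars:search:ad-view" })); // good
console.log(queueFor({ channel: "boats", pageName: "" })); // bad
```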

Keeping the tracking reliable

Adopting a new tracking platform comes with many advantages, but we won’t benefit from them unless we can ensure our tracking is reliable.

Luckily, Snowplow has developed a miniature version of their data pipeline called Snowplow Micro. It enables us to validate the events that we fire in our single-page apps.

Snowplow Micro & Cypress ❄️ 🚜

Snowplow Micro runs in a Docker container and connects to Iglu, our schema registry, where we store our schemas. It lets us fire events at Micro just as they would be fired in production, but contained within our pipelines or on a developer’s machine.

We practise continuous deployment. Because many of our single-page apps are released multiple times a day, we need to ensure that tracking still works as expected after every release.

As part of the continuous deployment process, we run various automated tests, written using Cypress, to verify that the application works correctly. These tests navigate and interact with the site, which in turn produces many Snowplow events.

We used this to our advantage by routing these events straight into Snowplow Micro for validation. As it worked so well, we decided to develop our Snowplow testing framework around Cypress and Snowplow Micro.

Snowplow Micro exposes a collection of endpoints we can query. As we run it inside a Docker container, the address is /snowplow-micro/micro. The endpoints are:

- /all - an overall count of good, bad and total events

- /good - all the good events that have passed validation

- /bad - all the bad events that have failed validation

- /reset - clear all of the events and start fresh
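A small helper for addressing these endpoints could look like the sketch below. The endpoint names come from the list above; microUrl is a hypothetical helper, and the localhost:9090 base in the example is a hypothetical stand-in for wherever Micro is reachable in your setup.

```typescript
// The four Snowplow Micro endpoints listed above.
type MicroEndpoint = "all" | "good" | "bad" | "reset";

// Hypothetical helper: joins a Micro base address and an endpoint name,
// tolerating trailing slashes on the base.
function microUrl(base: string, endpoint: MicroEndpoint): string {
  return base.replace(/\/+$/, "") + "/" + endpoint;
}

// Hypothetical base address for a locally running Micro container.
const microBase = "http://localhost:9090/micro";

console.log(microUrl(microBase, "good")); // http://localhost:9090/micro/good
console.log(microUrl(microBase + "/", "reset")); // http://localhost:9090/micro/reset
```

Typing the endpoint as a union means a typo such as "goood" is caught by the compiler rather than surfacing as a 404 halfway through a test run.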

Cypress is an outstanding modern testing framework. It’s versatile and, most importantly, lets us write custom commands to mould it to our needs.

The solution

We wanted to make testing Snowplow events as effortless as possible, so we divided the framework into two categories: the free stuff and the not-so-free stuff.

The free stuff 🤑

Free means that no additional work is required to benefit from the framework, apart from the initial setup.

Good / Bad-queue validation

The simplest automated check is to verify that, after running the pre-existing Cypress tests, there is at least one good event and no bad events. Each test should always produce at least one page_view event, which fires when the page loads; any other interactions in the test increase the event count.

We used the before and after Mocha hooks to:

- Clear Snowplow Micro before each test

- Assert that we have zero bad events and at least one good event

- Validate contexts
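The good/bad check from the hooks can be sketched as a plain function over the /all response. The MicroSummary shape (total, good and bad counts) is our assumption about what Micro returns, and assertQueueHealthy is a hypothetical name, not part of Cypress or Micro.

```typescript
// Assumed shape of Snowplow Micro's /all response.
interface MicroSummary {
  total: number;
  good: number;
  bad: number;
}

// Hypothetical check for the after hook: no bad events and at least one
// good event (the page_view that fires on load). It throws with a readable
// message so the test fails, and otherwise returns the summary unchanged.
function assertQueueHealthy(summary: MicroSummary): MicroSummary {
  if (summary.bad !== 0) {
    throw new Error("Expected 0 bad events but found " + summary.bad);
  }
  if (summary.good < 1) {
    throw new Error("Expected at least 1 good event but found " + summary.good);
  }
  return summary;
}
```

In Cypress, this might be wired into the hooks along the lines of `beforeEach(() => { cy.request(resetUrl); })` and `afterEach(() => { cy.request(allUrl).its('body').then(assertQueueHealthy); })`, where resetUrl and allUrl are placeholders for your Micro endpoints.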

Validating contexts

Contexts are a vital part of Snowplow events: they provide a deep understanding of the application state when an event fires. We therefore need to make sure these contexts are attached to events as expected.

We bundled automatic context validation for page view events into our after Mocha hook. At the end of each test, we verify that each event contains the required set of contexts. One context we always test for is page_context, which provides information about the page.

Different apps may require additional contexts in their events, so we made it easy for developers to add their own contexts to the checks. All a developer needs to do is create a list of contexts and pass it into the after function. The tests then verify the contents of both the default contexts and the ones the developer has added.

We built the framework in TypeScript, which gives real-time insight into the type of argument a function requires. In this instance, it tells us that the context validation function takes a list of strings naming the additional contexts to validate.
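The context check, with the extra contexts typed as string[], might look something like this. We assume each /micro/good entry lists the Iglu URIs of its attached contexts in a contexts array and that contexts are matched by schema name; validateContexts, DEFAULT_CONTEXTS and contextName are hypothetical names. Unlike the framework described above, this sketch only checks that the contexts are present, not their contents.

```typescript
// Assumed, simplified shape of one /micro/good entry: the event type plus
// the Iglu URIs of the contexts attached to the event.
interface GoodEvent {
  eventType: string;
  contexts: string[];
}

// Contexts every page view must carry. page_context comes from the post;
// any others would be app-specific.
const DEFAULT_CONTEXTS: string[] = ["page_context"];

// iglu:com.example/page_context/jsonschema/1-0-0 -> page_context
function contextName(igluUri: string): string {
  return igluUri.split("/")[1];
}

// Hypothetical validator. Typing additionalContexts as string[] is what lets
// the editor tell a developer exactly what to pass in.
function validateContexts(events: GoodEvent[], additionalContexts: string[] = []): void {
  const required = DEFAULT_CONTEXTS.concat(additionalContexts);
  const problems: string[] = [];
  events.forEach((event, index) => {
    if (event.eventType !== "page_view") return; // only page views are checked
    const present = event.contexts.map(contextName);
    required.forEach((name) => {
      if (present.indexOf(name) === -1) {
        problems.push("page view " + index + " is missing " + name);
      }
    });
  });
  // Report every missing context at once rather than stopping at the first.
  if (problems.length > 0) {
    throw new Error(problems.join("; "));
  }
}
```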

Not so free stuff 😭

This section focuses on building custom commands in Cypress. Using them, we developed a fluent interface to help validate the contents of Snowplow events.

Capturing the event

Cypress allows us to define what it calls a Route, which observes and captures network activity in our application; each Route is assigned an alias for later use. We created a Route that monitors for Snowplow events and named it snowplowEvent.

Using the Wait command with the alias we gave the Route, we wait for the Snowplow event to fire before continuing. Once the Route has triggered, we query Snowplow Micro for the data it has received, rather than using the data captured by the Route. This is because we want Snowplow Micro to validate the event before we verify the data in it.
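For the Route itself, the key question is which requests count as Snowplow events. Snowplow's JavaScript tracker sends POST requests to /com.snowplowanalytics.snowplow/tp2 and GET requests to /i by default (the POST path is configurable), so a hypothetical matcher could look like this.

```typescript
// Default collector paths used by Snowplow's JavaScript tracker. The POST
// path can be customised in tracker configuration, so adjust if yours differs.
const SNOWPLOW_POST_PATH = "/com.snowplowanalytics.snowplow/tp2";
const SNOWPLOW_GET_PATH = "/i";

// ES5-friendly suffix check.
function endsWith(value: string, suffix: string): boolean {
  return value.length >= suffix.length && value.slice(value.length - suffix.length) === suffix;
}

// Hypothetical matcher: is this captured request a Snowplow event, i.e.
// something the snowplowEvent Route should pick up?
function isSnowplowRequest(method: string, url: string): boolean {
  // GET requests carry their payload in the query string, so ignore it.
  const path = url.split("?")[0];
  const verb = method.toUpperCase();
  if (verb === "POST") return endsWith(path, SNOWPLOW_POST_PATH);
  if (verb === "GET") return endsWith(path, SNOWPLOW_GET_PATH);
  return false;
}
```

Note that cy.server and cy.route were deprecated in Cypress 6 in favour of cy.intercept. With a newer Cypress, the equivalent of the snowplowEvent Route would be something like `cy.intercept('POST', '**/com.snowplowanalytics.snowplow/tp2').as('snowplowEvent')`, followed by `cy.wait('@snowplowEvent')`.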

We can now create the first command in our chain, the custom command getLatestPageViewEvent, which queries the /micro/good endpoint to get our validated event back.
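The selection logic behind getLatestPageViewEvent might look like the following. We assume each /micro/good entry carries an eventType and the enriched event, including an ISO-8601 collector_tstamp; latestPageView is a hypothetical name, and the sketch compares timestamps rather than relying on the order of the response.

```typescript
// Assumed, simplified shape of one entry in Micro's /micro/good response.
// Only the fields this sketch needs are modelled.
interface GoodEvent {
  eventType: string;
  event: {
    collector_tstamp: string; // ISO-8601 timestamp (assumed format)
    [field: string]: unknown;
  };
}

// Returns the most recent page_view, or throws if there is none.
function latestPageView(events: GoodEvent[]): GoodEvent {
  const pageViews = events.filter((e) => e.eventType === "page_view");
  if (pageViews.length === 0) {
    throw new Error("No page_view events found in Snowplow Micro");
  }
  // Keep whichever page view has the later collector timestamp.
  return pageViews.reduce((latest, e) =>
    Date.parse(e.event.collector_tstamp) > Date.parse(latest.event.collector_tstamp) ? e : latest
  );
}
```

Wrapped as a parent command, this could be registered with `Cypress.Commands.add('getLatestPageViewEvent', () => cy.request(goodUrl).its('body').then(latestPageView))`, where goodUrl is a placeholder for your /micro/good address.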

Validating the data

By default, Snowplow infers the page name from the HTML title tag. At Auto Trader, we construct the page name using an in-house pattern. In some cases, we dynamically pull data from GraphQL to generate it, so we need to verify that the page name was generated correctly.

When chaining custom commands, the first command is defined as a parent command and is followed by one or more child commands. Cypress passes the data produced by one command to the next in the form of a subject. Here, the subject is the Snowplow Micro event in JSON format.

Using the subject from the parent command getLatestPageViewEvent, we can create our first child command, verifyPageName(pageName). This command verifies the page name by comparing the appropriate JSON property in the subject with the data passed in as an argument to the command.

Like verifyPageName, our next child command, verifyContextData(contextName, expectedData), compares properties in the subject with the data in its arguments. The first argument tells us which context we want to verify, and the second contains the data we expect.
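The logic behind these two child commands can be sketched as plain functions that each take the subject, check one thing and return the subject unchanged, which is what makes them chainable. We assume the page name lives in the enriched event's page_title field and that contexts arrive as self-describing JSON under event.contexts.data; both are assumptions about the subject's shape, and the function bodies are illustrative rather than Auto Trader's code.

```typescript
// A self-describing context: Iglu schema URI plus its data.
interface SelfDescribing {
  schema: string;
  data: { [key: string]: unknown };
}

// Assumed, simplified subject: the enriched event from Snowplow Micro, with
// the page name in page_title and contexts as self-describing JSON.
interface PageViewSubject {
  event: {
    page_title: string;
    contexts: { data: SelfDescribing[] };
  };
}

// Child-command logic: check one thing, then return the subject unchanged
// so the next check in the chain receives it.
function verifyPageName(subject: PageViewSubject, pageName: string): PageViewSubject {
  const actual = subject.event.page_title;
  if (actual !== pageName) {
    throw new Error("Expected page name " + pageName + " but got " + actual);
  }
  return subject;
}

function verifyContextData(
  subject: PageViewSubject,
  contextName: string,
  expectedData: { [key: string]: unknown }
): PageViewSubject {
  // Match by schema name, the second segment of iglu:vendor/name/format/version.
  const context = subject.event.contexts.data.filter((c) => c.schema.split("/")[1] === contextName)[0];
  if (!context) {
    throw new Error("Context " + contextName + " is not attached to the event");
  }
  // Only expected keys are compared. JSON.stringify is a shortcut for equality
  // that is sensitive to key order inside nested objects.
  Object.keys(expectedData).forEach((key) => {
    const expected = JSON.stringify(expectedData[key]);
    const actual = JSON.stringify(context.data[key]);
    if (expected !== actual) {
      throw new Error(contextName + "." + key + ": expected " + expected + " but got " + actual);
    }
  });
  return subject;
}
```

In Cypress, functions like these would be registered as child commands with, for example, `Cypress.Commands.add('verifyPageName', { prevSubject: true }, verifyPageName)`, so the subject yielded by the previous command is passed in as the first argument.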

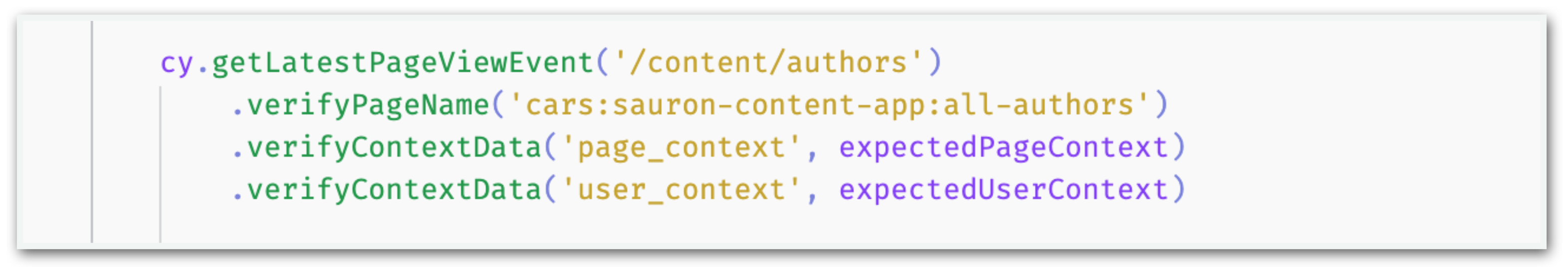

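The final result is a chain of multiple commands: the parent command getLatestPageViewEvent followed by the child commands verifyPageName and verifyContextData.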

As you can see, we can chain multiple child commands together in any order, as long as we start with a parent command. Any failure in the chain causes the test to fail, as you would expect. Building the testing API from custom commands keeps it as straightforward as possible, with very little room for error.

If you are interested in integrating Snowplow Micro with Cypress, check out this sample project to get you started.
